Franck Djeumou

Assistant Professor

Mechanical, Aerospace, and Nuclear Engineering - djeumf2@rpi.edu

Rensselaer Polytechnic Institute (RPI)

Biography

I am a tenure-track Assistant Professor in the Mechanical, Aerospace, and Nuclear Engineering Department at Rensselaer Polytechnic Institute (RPI) as of Fall 2024.

My research group focuses on novel modeling and control approaches to enable autonomy in extreme, rare, and safety-critical scenarios. We are particularly interested in decision-making under uncertainty and limited data, as well as structured and safe learning on the fly with scarce information. One of our goals is to expand the safety envelope of today’s autonomous systems by enabling operation at the limits of what the (physical) systems can achieve. Examples of applications we are exploring include flight control under failure conditions, acrobatic flight maneuvers, agile robotics, and autonomous driving at the limits of vehicle handling capabilities.

- Learning for autonomy

- Stochastic modeling

- Optimal control

- Scientific machine learning

- Generative AI

- Ph.D. in Electrical and Computer Eng, 2023

  The University of Texas at Austin, US

- B.S. and M.S. in Aerospace Eng, 2018

  ISAE-SUPAERO, France

- M.S. (COMASIC) in Computer Science, 2017

  École Polytechnique, France

- Preparatory classes (junior undergraduate level) in Mathematics and Physics, 2014

  Lycée Fénelon, France

Recent News

[04/07/25] Two papers (RL drifting and risk-averse racing) were accepted at ICRA 2025, and one paper was accepted at ICLR 2025.

[11/14/24] One of our initial submissions to ICRA 2025, Reference-Free Formula Drift with RL, was featured in the London-based magazine New Scientist.

[11/08/24] Our work on One Model to Drift Them All won an Outstanding Paper Award at the 2024 Conference on Robot Learning (CoRL).

[08/16/24] I joined RPI as an Assistant Professor in the Mechanical, Aerospace, and Nuclear Engineering Department.

Featured Publications

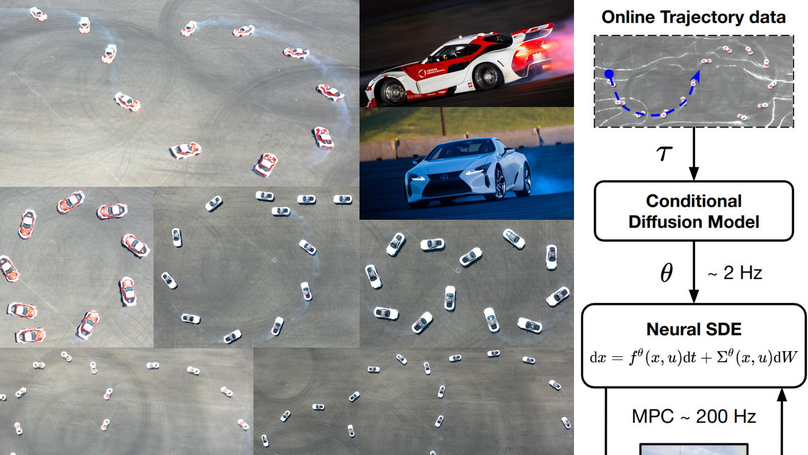

We propose a framework to learn a conditional diffusion model for high-performance vehicle control using an unlabelled dataset containing trajectories from distinct vehicles in different environments. By conditioning the generation process on online measurements, we integrate the diffusion model into a real-time model predictive control framework for driving at the limits, and test it on a Toyota GR Supra and Lexus LC 500 in various environments. We show that the model can adapt on the fly to a given vehicle and environment, paving the way towards a general, reliable method for autonomous driving at the limits of handling.

We present a framework and algorithms for learning uncertainty-aware models of controlled dynamical systems that leverage a priori physics knowledge. Through experiments on a hexacopter and on other simulated robotic systems, we demonstrate that the proposed approach yields data-efficient models that generalize beyond the training dataset, and that these learned models result in performant model-based reinforcement learning and model predictive control policies. A journal version of this paper will be submitted soon with the open-source code.

We propose a data-efficient, learning-based control approach used on a Toyota Supra to achieve autonomous drifting on various trajectories. Experiments show that a small amount of driving data (less than three minutes) is sufficient to achieve high-performance drifting on various trajectories at speeds up to 45 mph, with a 4× improvement in tracking performance, smoother control inputs, and faster computation time compared to baselines.

We provide a case study for DaTaReach and DaTaControl, algorithms for reachability analysis and control of systems with a priori unknown nonlinear dynamics. In a scenario involving an F-16 aircraft diving towards the ground from a low altitude and at a high downward pitch angle, we show how DaTaControl prevents a ground collision using only the measurements obtained during the dive and elementary laws of physics as side information.